In the fast-moving world of modern application deployment, one name has been a game-changer: Kubernetes. This powerful orchestration tool has transformed how we think about deploying and scaling containerized applications. In this blog post, we introduce the basic concepts of Kubernetes, walk through its architecture, and look at why it has become one of the most important tools in the stack of tech companies worldwide.

Kubernetes Introduction

At its core, Kubernetes is an open-source platform for container orchestration. But what does that mean?

Let’s break it down:

- Containers: Containers are the foundation of modern application deployment. A container is a lightweight, portable package that contains everything needed to run a piece of software: code, runtime, libraries, and dependencies. Containers are efficient because, unlike traditional virtual machines, they do not bundle a full guest operating system; they share the host's OS kernel.

- Orchestration: In computing, orchestration is the automated coordination and management of the many individual tasks, processes, and services that make up a system or application. An orchestrator decides what runs where and in what order, so that the pieces work together as a whole. For containers, that means automatically scheduling, starting, connecting, scaling, and replacing them.

Containers vs Virtual Machines (VMs)

Both containers and virtual machines provide an isolated environment for applications, but their approaches are fundamentally different. VMs virtualize hardware resources such as CPU, memory, and storage, so each VM runs its own guest operating system. Containers virtualize only the operating system, which makes them lightweight and highly portable. This core difference makes containers more resource-efficient, easier to scale, and a natural fit for modern microservices architectures.

To make the comparison concrete, let’s break it down into some key points.

– Containers:

- Lightweight: Containers share the host OS kernel, making them super efficient.

- Fast startup: Containers typically start in seconds or less.

- Isolation: They provide process-level isolation but share the host OS kernel.

- Ideal for microservices architecture.

– VMs:

- Heavier: VMs come with their own OS, which can be resource-intensive.

- Slower startup: VMs take longer to boot.

- Strong isolation: Each VM is like a mini-computer.

- Great for legacy apps and complex workloads.

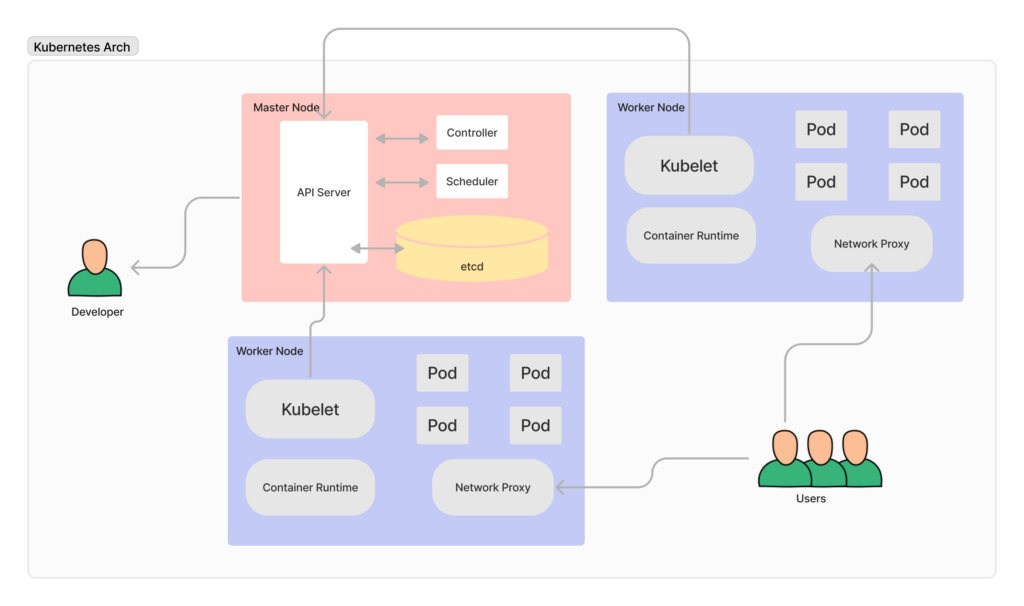

Kubernetes Architecture

Before we can fully understand Kubernetes, we need to look at its architecture. A Kubernetes deployment is a cluster of nodes, where each node is a machine that runs part of the workload. Nodes can be physical or virtual machines, and together they form the basis of a Kubernetes infrastructure.

Kubernetes runs workloads on nodes in encapsulated units called pods. A pod is a single running instance of an application and contains one or more containers. By grouping closely related containers in a pod, Kubernetes makes applications easier to manage and more resource-efficient.
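To make the idea of a pod concrete, here is a minimal sketch of a pod definition with two containers that share a volume. It is built as a plain Python dictionary and printed as JSON. The pod name, container names, image tags, and paths are illustrative assumptions, not something this post prescribes.

```python
import json


def make_pod(name: str) -> dict:
    """Build a two-container Pod manifest (all names and images are hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the pod and is visible
            # to every container that mounts it.
            "volumes": [{"name": "shared-logs", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.27",  # assumed image tag
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [
                        {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                    ],
                },
                {
                    "name": "log-tailer",
                    "image": "busybox:1.36",  # assumed image tag
                    "command": ["sh", "-c", "tail -F /logs/access.log"],
                    "volumeMounts": [
                        {"name": "shared-logs", "mountPath": "/logs"}
                    ],
                },
            ],
        },
    }


pod = make_pod("demo-web")
print(json.dumps(pod, indent=2))
```

Both containers mount the same `shared-logs` volume, which is one way containers in a pod can share storage. In practice such a definition is usually written as YAML, but the structure is the same.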

Beyond these core capabilities, Kubernetes provides rich abstractions such as services, deployments, and namespaces that simplify deploying and managing applications. Services are a good example: they hide a hard problem, namely how pods can find and communicate with each other transparently even as individual pods come and go.

We know these are some heavy words, so let’s make it simple with the key components of the Kubernetes architecture.

– Master Node (also called the control plane):

- The brain of the cluster.

- Components: API server, etcd (the cluster's distributed key-value store), controller manager, and scheduler.

- The master orchestrates everything like a maestro leading an orchestra.

– Worker Nodes:

- The muscle.

- Components: kubelet (communicates with the master), kube-proxy, and a container runtime (Docker, containerd, etc.).

- Worker nodes host our containers.

– Pods:

- The smallest deployable units.

- Pods group one or more containers that share storage and network.

- Like a cozy apartment where containers live together.

– Services:

- The traffic cops.

- Services expose pods to the world (or just the cluster).

- LoadBalancer, NodePort, and ClusterIP are all part of the service squad.

– ReplicaSets and Deployments:

- The cloning experts.

- Ensure a desired number of identical pods are running.

- Handle scaling, rolling updates, and rollbacks.
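As a rough illustration of how the deployment and service pieces fit together, the sketch below builds a Deployment and a matching Service as Python dictionaries and prints them as a single JSON list. The name `demo-web`, the image tag, and the port numbers are assumptions chosen for the example.

```python
import json

# Labels tie the pieces together: the Deployment stamps them on its pods,
# and the Service selects pods that carry them. (Hypothetical label.)
APP_LABELS = {"app": "demo-web"}


def make_deployment(replicas: int) -> dict:
    """Deployment that asks for `replicas` identical pods."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "demo-web"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": {
                    "containers": [
                        {
                            "name": "web",
                            "image": "nginx:1.27",  # assumed image tag
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


def make_service(service_type: str = "ClusterIP") -> dict:
    """Service that routes traffic to any pod carrying APP_LABELS."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "demo-web"},
        "spec": {
            "type": service_type,  # ClusterIP, NodePort, or LoadBalancer
            "selector": APP_LABELS,
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


deployment = make_deployment(replicas=3)
service = make_service()
bundle = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(bundle, indent=2))
```

The output can be saved to a file and, on a cluster you control, applied with `kubectl apply -f <file>`, since kubectl accepts JSON as well as YAML. Changing `replicas` and re-applying is how scaling is expressed in this model.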

In order to understand it more clearly, refer to the diagram below:

Now that we have discussed what Kubernetes is and how it works, let’s look at why we should use it and how it simplifies deployment work.

Kubernetes vs Traditional Deployment

Against this background, the rise of containerization technologies like Docker is a big deal: it is revolutionizing how applications are packaged, delivered, and run.

However, as applications grow more complex by the day and more distributed across hosts, teams need an effective and scalable container orchestration platform. Enter Kubernetes, which stands in sharp contrast to traditional deployment methods.

Traditional Deployment

The common, traditional practice of deploying an application is as follows.

Developers used to pack the whole application, along with all its dependencies, libraries, and configurations, into a deployable artifact such as a ZIP or WAR file. That artifact would then be deployed to a single server, or occasionally to a cluster of servers, where every instance on every server had to be configured and managed manually.

This worked quite well for small-scale applications, but as applications grew in size and distribution, the approach quickly became unwieldy and error-prone. Scaling out to meet increased demand typically meant manually provisioning and configuring individual servers, which is slow and easy to get wrong. Further, traditionally deployed applications are often tightly coupled to their infrastructure, which makes migrating or replicating them to another environment a big problem.

Kubernetes

Kubernetes is a free and open-source container orchestration platform that addresses the pain points of traditional deployment. It builds on containerization and abstracts the underlying infrastructure, so applications can be packaged into lightweight, portable containers that run consistently across environments.

Here are some key advantages that Kubernetes brings to the table:

- Scalability and High Availability: Kubernetes automates the deployment, operation, and scaling of applications and manages application lifecycles across clouds. With capabilities such as auto-scaling and self-healing, it helps keep applications available and responsive under heavy load and even when a node fails.

- Infrastructure Abstraction: Kubernetes abstracts away the underlying infrastructure. This makes applications portable across cloud providers, on-premises data centers, and hybrid environments. The abstraction also makes it easier to handle differences in infrastructure components such as storage, networking, and load balancing.

- Declarative Configuration: Kubernetes follows a declarative configuration model. Developers declare the desired state of the application, and Kubernetes continuously works to make the actual state match it. This declarative model eases application management and keeps workflows consistent across environments.

- Service Discovery and Load Balancing: Kubernetes offers built-in service discovery and load balancing. Applications can find and talk to each other through stable service names, and traffic is distributed across multiple replicas for better performance and resilience.

- Rolling Updates and Rollbacks: Kubernetes supports rolling updates, so developers can update applications gradually, typically without downtime. It also supports rollbacks: if an update causes issues, the previous version can be restored easily, which minimizes disruptive deployments.

- Self-Healing and Auto-Scaling: Kubernetes continuously checks the health of containers with probes, and when a container fails, it is restarted or replaced automatically. A cluster can also scale applications up and down based on predefined metrics, which optimizes resource use and lowers cost.

- Robust Ecosystem: One of Kubernetes' greatest strengths is its ecosystem of tools, plugins, and integrations, along with a community of developers who extend and adapt the platform to their needs.
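The declarative model, self-healing, and auto-scaling described in the list above can be pictured with a toy control loop. This is a simplified sketch, not how Kubernetes is implemented: `reconcile` is a hypothetical helper that makes a list of pod names match a desired count, and `desired_replicas` uses the basic ratio formula documented for the Horizontal Pod Autoscaler, leaving out details such as tolerances and stabilization windows.

```python
import math


def reconcile(desired, running, next_id):
    """One pass of a toy control loop: make the pod list match `desired`.

    Returns the new pod list and the next unused pod id.
    """
    pods = list(running)
    while len(pods) < desired:  # too few pods: start replacements
        pods.append(f"pod-{next_id}")
        next_id += 1
    del pods[desired:]  # too many pods: remove the extras
    return pods, next_id


def desired_replicas(current_replicas, current_metric, target_metric):
    """Basic autoscaling ratio: ceil(current * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


# Declare the desired state: three replicas.
initial, next_id = reconcile(3, [], 0)

# Simulate a crash of one pod, then let the loop heal the difference.
survivors = [p for p in initial if p != "pod-1"]
healed, next_id = reconcile(3, survivors, next_id)

# Simulate load: average CPU at 90% against a 60% target.
target = desired_replicas(len(healed), current_metric=90.0, target_metric=60.0)
scaled, next_id = reconcile(target, healed, next_id)

print(initial)  # ['pod-0', 'pod-1', 'pod-2']
print(healed)   # ['pod-0', 'pod-2', 'pod-3']
print(target, scaled)  # 5 ['pod-0', 'pod-2', 'pod-3', 'pod-4', 'pod-5']
```

The point of the sketch is that nobody issues a "restart pod-1" command. The desired count is declared once, and each pass of the loop closes whatever gap it finds between declared and actual state.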

Kubernetes does introduce a set of new concepts and, compared to traditional deployments, a corresponding learning curve. Even so, its improvements in scalability, resilience, and operational efficiency make it a better choice for modern application development and deployment.

Conclusion

In conclusion, Kubernetes, together with containerization, represents a true paradigm shift in how modern applications are built, deployed, and managed. With its components and features, including automatic scaling and powerful orchestration mechanisms, it brings flexibility, efficiency, and scalability to the next level within a software development organization.

As you continue your journey with Kubernetes deployments, clusters, and more, you can explore further cloud computing topics and technologies on the CloudZenia cloud service provider website.