Introduction

Terraform is one of the most widely used Infrastructure as Code (IaC) tools, thanks to its robust feature set. With just a few commands, it lets you provision resources on most major cloud platforms through provider plugins.

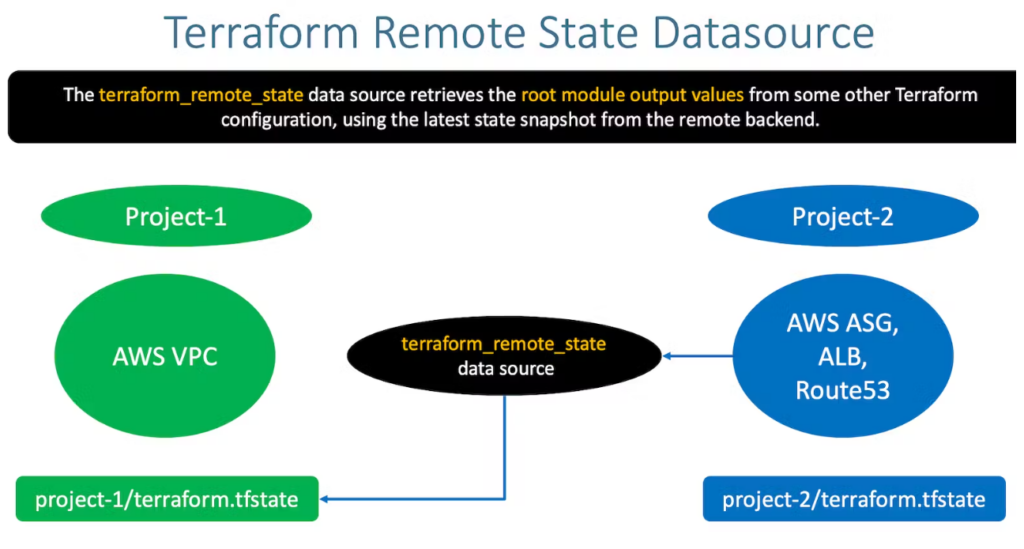

After provisioning resources, Terraform records their details in a state file, commonly referred to as ‘tfstate.’ This file can be stored locally or in a remote backend; remote storage makes it easier for engineers to collaborate on the same infrastructure. Keeping the state in one shared place also reduces errors caused by conflicting or out-of-date views of the resources.

Problem Statement

Suppose your organization’s development team initially creates a Virtual Private Cloud (VPC) to host ongoing projects, with all the necessary resources contained within for better separation. After analyzing the required resources, you write a Terraform script to provision them.

Later, a client presents a new idea, as often happens, and requests additional resources within the same VPC. How would you handle this situation?

Best Practices

You can handle the situation described above in two ways:

- Write the Terraform code for the new resources and add it to the existing configuration. Mistakes are easy to make with this approach, so take care to reference the correct attributes and dependencies of the resources already defined in the .tf files.

- Create a new configuration file for the new resources and fetch the required attributes of the previously created AWS resources from the tfstate file.

Demo

To demonstrate these concepts, we will build a simple project: first we create a VPC and a security group (SG) in one configuration file, and then we create two EC2 instances in that VPC and attach the SG by reading the remote state file.

Let’s get started!

Step-01: Cloning the Repository

Cloning the repository creates a local copy of the project hosted on a version control system; here, we are using Git.

Running the “git clone” command followed by the repository’s URL retrieves the entire project along with its version history, which enables collaborative work, bug fixes, and feature development on your local machine.

Visit the link below for more about Terraform Data Sources:

GitHub Repo link

Step-02: Understanding the Script

Have a look at backend.tf. This configuration stores our tfstate file in an AWS S3 bucket.
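As a rough illustration, an S3 backend block usually looks something like the sketch below. The bucket name, key, and region are placeholders chosen for this example, not the values used in the repository.

```hcl
# backend.tf (minimal sketch; all values below are hypothetical)
terraform {
  backend "s3" {
    bucket = "example-terraform-state-bucket" # assumed bucket name; must already exist
    key    = "demo/terraform.tfstate"         # assumed path of the state object in the bucket
    region = "us-east-1"                      # assumed region of the bucket
  }
}
```

Backend blocks cannot reference variables, so these values are written as literals or supplied when running terraform init.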

In this use case, we use the official Terraform modules for VPC and EC2 and call them in main.tf; in addition, we have written our own security group module to provision the SG.

In vpc.tf, we call the official VPC module and pass the required attributes; we also call the SG module there.
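A vpc.tf along these lines might look like the following sketch. It assumes the VPC module meant here is the widely used terraform-aws-modules/vpc/aws registry module; the SG module path, its input and output names, and all names and CIDR ranges are hypothetical. The output blocks matter because a remote state lookup can only read values that the root module exports as outputs.

```hcl
# vpc.tf (minimal sketch; names, CIDRs, and the SG module interface are assumptions)
module "vpc" {
  source = "terraform-aws-modules/vpc/aws" # assumed registry module

  name           = "demo-vpc"
  cidr           = "10.0.0.0/16"
  azs            = ["us-east-1a", "us-east-1b"]
  public_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
}

module "sg" {
  source = "./modules/security-group" # hypothetical path to the custom SG module

  vpc_id = module.vpc.vpc_id # hypothetical input name on the custom module
}

# Root-level outputs: only these are visible to readers of the state file.
output "vpc_id" {
  value = module.vpc.vpc_id
}

output "public_subnet_ids" {
  value = module.vpc.public_subnets
}

output "security_group_id" {
  value = module.sg.security_group_id # hypothetical output name on the custom module
}
```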

Step-03: Understanding ec2.tf

The first few lines of the script read the state file. To do that, we need to know where the tfstate file is stored, what it is named, and the other attributes required to access it.

Then we call the official EC2 module and, for the VPC security group ID and subnet ID attributes, use a data source to fetch the IDs from the remote state file.
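The pattern described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the repository's exact file: the bucket, key, and region are placeholders, the output names public_subnet_ids and security_group_id are hypothetical and must match whatever the VPC configuration actually exports, and the EC2 module is assumed to be terraform-aws-modules/ec2-instance/aws.

```hcl
# ec2.tf (minimal sketch; backend values and output names are assumptions)

# Read the state written by the VPC/SG configuration.
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "example-terraform-state-bucket" # must match the backend that stores the state
    key    = "demo/terraform.tfstate"
    region = "us-east-1"
  }
}

variable "ami_id" {
  description = "AMI to launch (hypothetical variable added for this sketch)"
  type        = string
}

module "ec2_instance" {
  source = "terraform-aws-modules/ec2-instance/aws" # assumed registry module
  count  = 2                                        # two instances, as in the demo

  name          = "demo-instance-${count.index}"
  ami           = var.ami_id
  instance_type = "t3.micro"

  # IDs come from the outputs of the remote state, not from hard-coded values.
  subnet_id              = data.terraform_remote_state.network.outputs.public_subnet_ids[0]
  vpc_security_group_ids = [data.terraform_remote_state.network.outputs.security_group_id]
}
```

If an expected output is missing from the state, Terraform fails at plan time with an unsupported attribute error, which is a quick way to spot a mismatch in output names.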

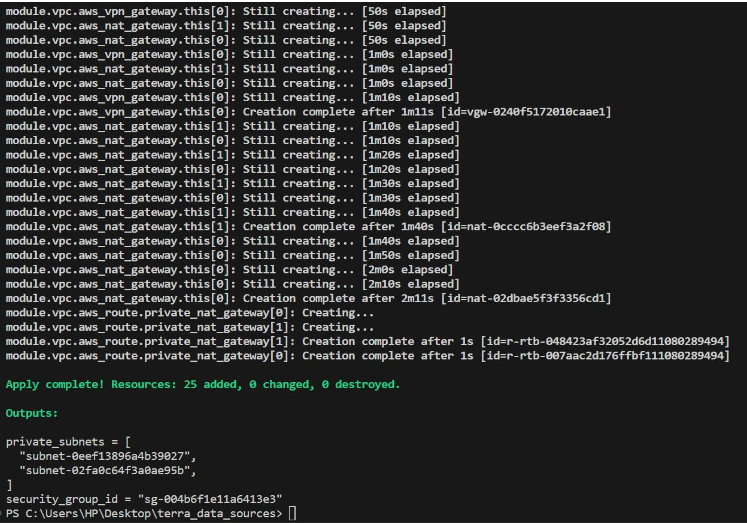

Step-04: Provisioning VPC and SG

Use the commands below to provision the resources in AWS:

terraform init

terraform plan

terraform apply

Note: Before running these commands, make sure to comment out the contents of the ec2.tf file. That file reads the remote state file, which has not been created yet, so Terraform will return an error.

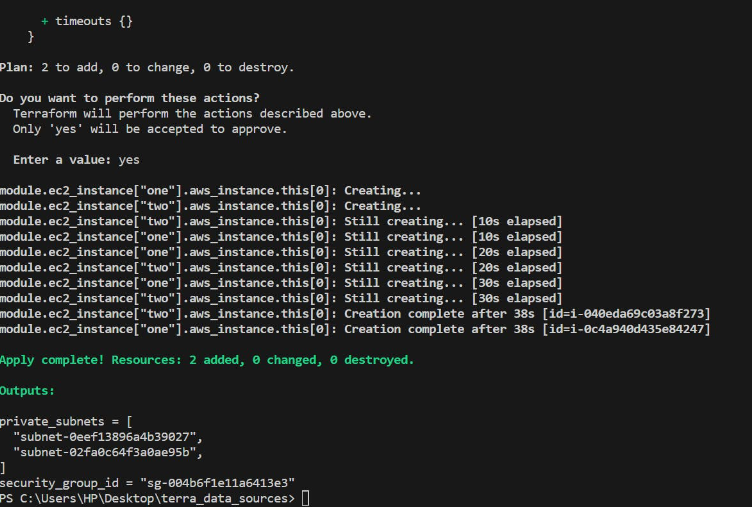

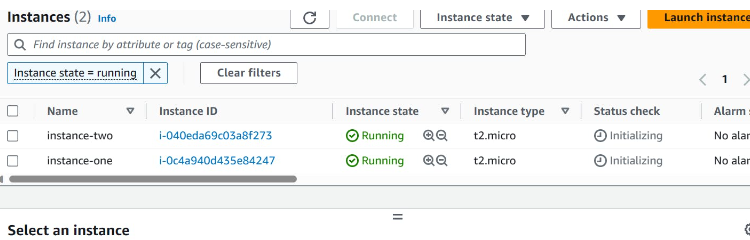

Step-05: Creating EC2 Instances Inside the Already Provisioned VPC

Uncomment the ec2.tf file and run the terraform apply command:

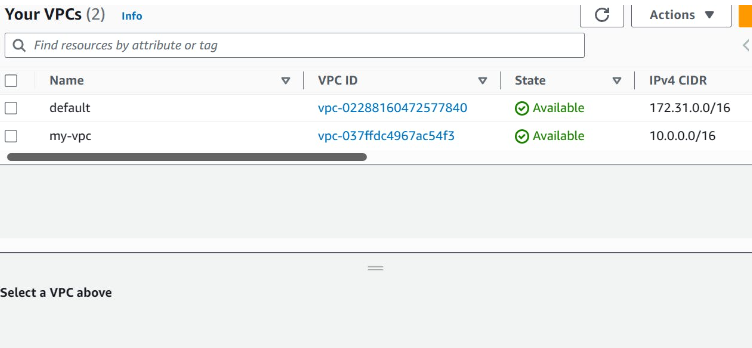

Step-06: Crosscheck the Resources on the AWS Console

And boom! You did it!

Conclusion

Data sources for AWS resources are a valuable feature in Terraform that lets you bring information about existing infrastructure into your infrastructure-as-code (IaC) workflows. They provide a flexible way to interact with existing resources, making it easier to manage complex infrastructure setups across various cloud and service providers.
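Besides reading a remote state file, the AWS provider's own data sources can look up existing resources directly. The sketch below assumes a VPC tagged Name = demo-vpc (a hypothetical tag value) and a recent AWS provider version that includes the aws_subnets data source.

```hcl
# Look up an existing VPC by tag instead of reading it from a state file.
data "aws_vpc" "selected" {
  tags = {
    Name = "demo-vpc" # hypothetical tag value
  }
}

# List the subnets that belong to that VPC.
data "aws_subnets" "selected" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.selected.id]
  }
}

output "existing_subnet_ids" {
  value = data.aws_subnets.selected.ids
}
```

This variant does not require access to the other configuration's state, at the cost of depending on tags or filters staying accurate.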

Thanks for reading!

To get access to more such informational do-it-yourself blogs and practical tech advice, visit our website CloudZenia.

Happy Learning!
